The trust module for your AI application

LINK2AI.Trust ensures Quality, Security and Transparency in your LLM-integrated applications – for internal AI solutions and AI products.

Used by industry leading companies and partners

LLMs are unreliable

They are vulnerable to malicious actors

Bad actors can manipulate LLMs in natural language. Those so called Prompt Injections and Jailbreaks in untrusted user input will compromise your application if not detected. When your application has access to databases or invokes functions, this becomes a real IT security problem.

They will get manipulated

LLMs are people pleasers by design. Users will take advantage of this, even if they don't have a malicious intent. Without strict behavior guardrails your LLM might take actions that aren not in your best interest.

Their responses might not comply with your guidelines

Even without malicious actors, LLMs don't always adhere to their instructions. Without verifying the LLM output for compliance, you can't be sure, that your application works as intended.

LINK2AI.Trust

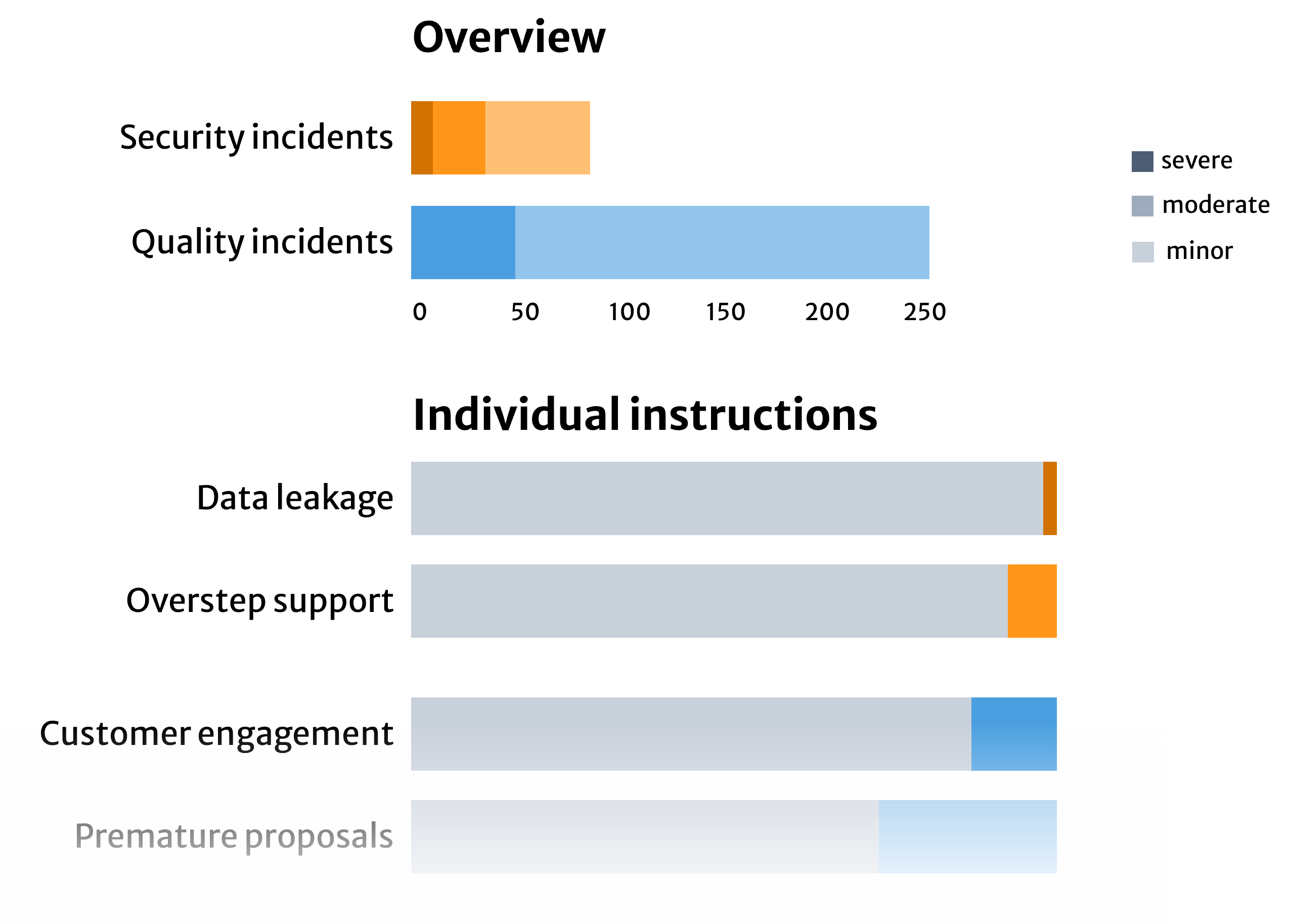

1. Security

We use state of the art detection methods for abnormal user input and model behavior. With a combination of fast and reliable techniques we can detect whenever a malicious actor tries to manipulate your application. Reliable, thorough and in real-time.

2. Quality

LLMs by design are unreliable hallucination machines. Grounding them with additional context in a RAG-Setup is only part of the solution. They will often fail to correctly reproduce the retrieved context, violate their given instructions or deviate from your desired behavior. LINK2AI.Trust assesses the quality of generated responses in detail so you can rely on your application.

3. Transparency

Do you actually know how your AI-application is used and whether it meets your requirements? The usual endless conversation logs cant help either. LINK2AI.Trust provides powerful insights into the performance of your AI application by integrating with industry leading business intelligence platforms.

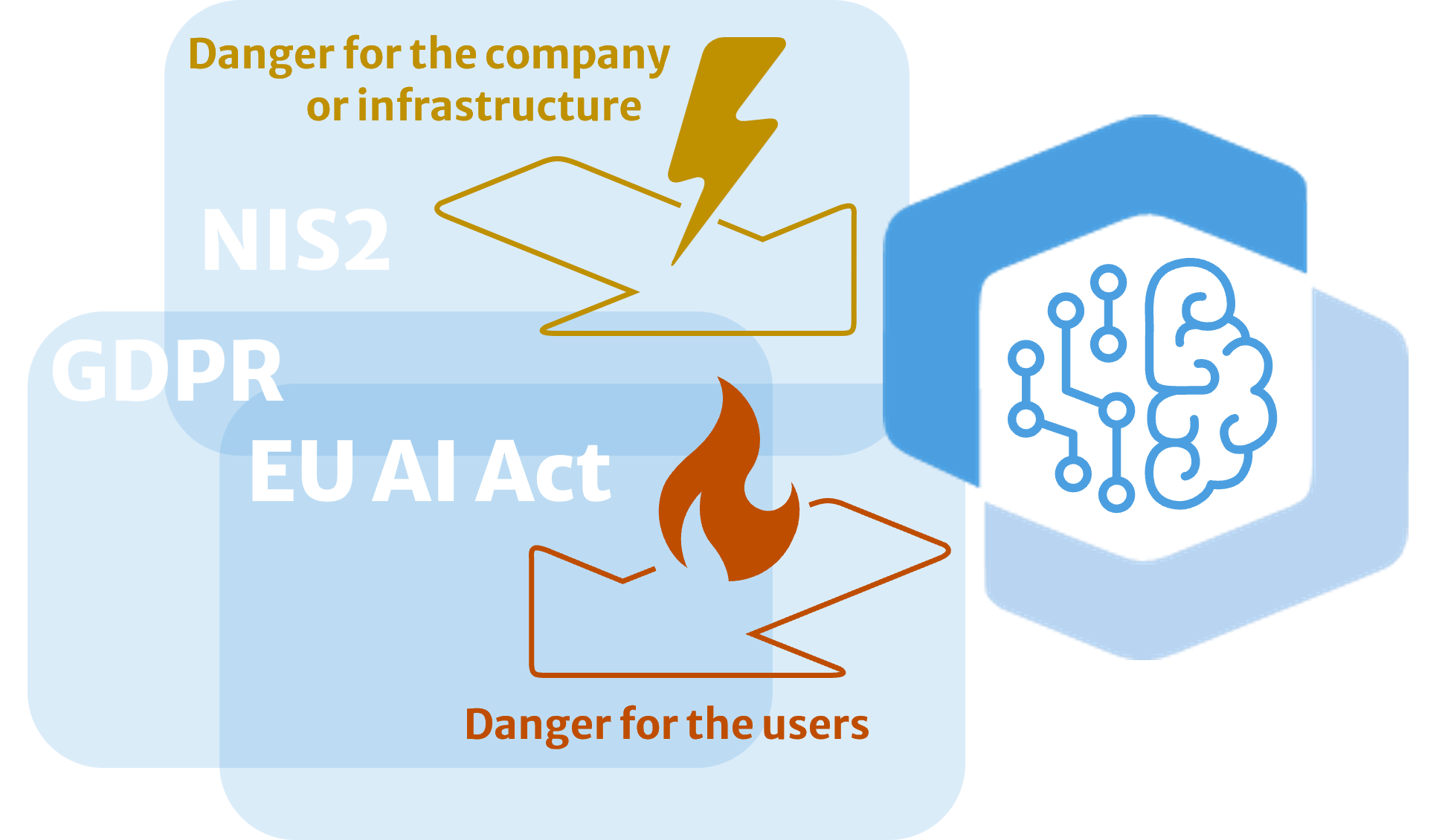

4. Compliance

Regulations such as the EU AI Act, GDPR, NIS2, and CRA require AI systems to be controlled, monitored, and auditable. LINK2AI.Trust helps by enforcing policy-based checks on inputs and outputs, reducing data and behavior risks, and capturing evidence over time. So compliance becomes a practical part of day-to-day operations—not a last-minute checklist.

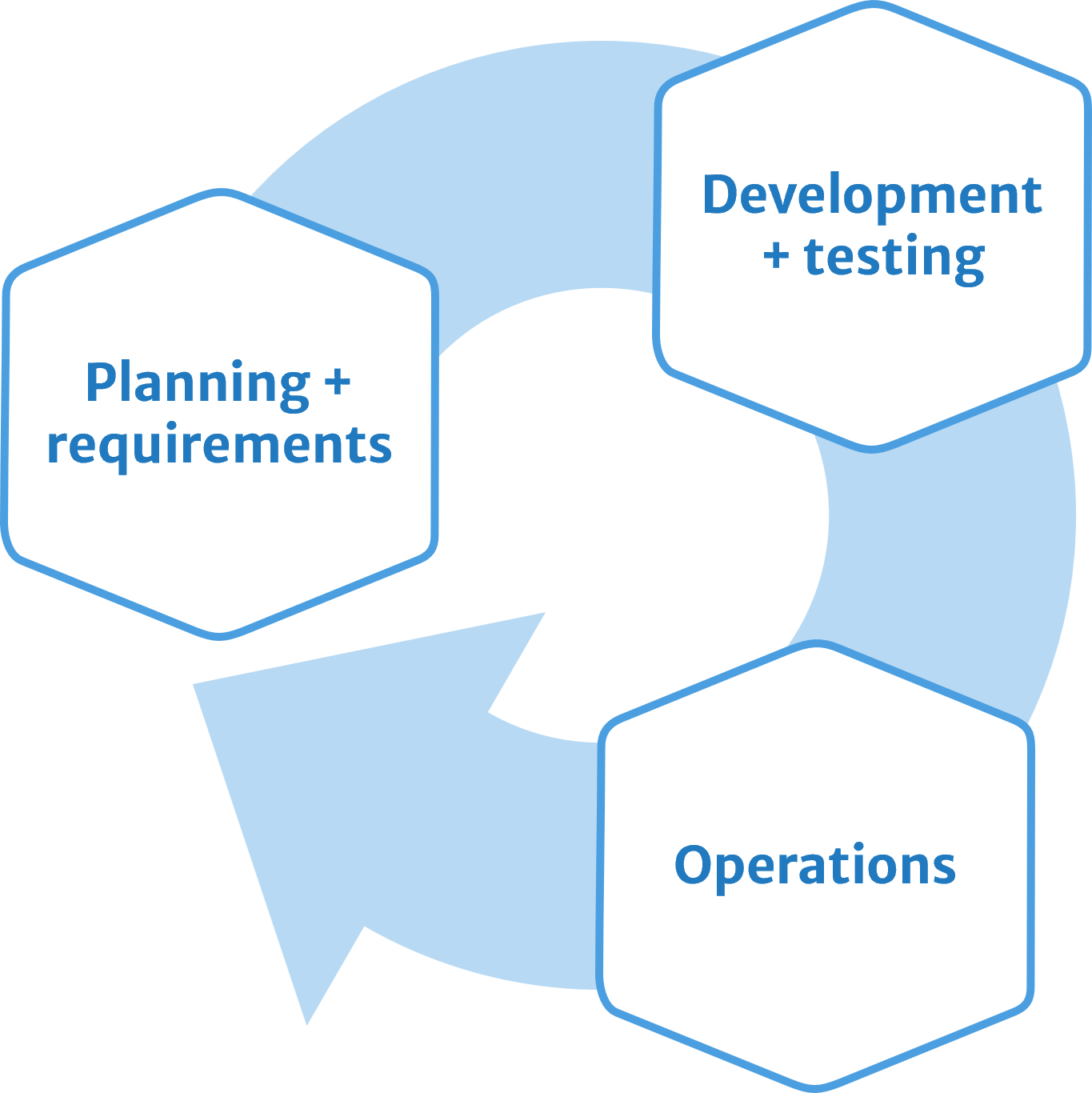

Support in every phase of your AI project

Planning Phase

The risks you need to be prepared for with your AI application depend on many factors: External users? Sensitive data? External content such as documents, emails or websites? Risks such as manipulation or compliance violations often only become apparent during operation – and then changes are expensive. LINK2AI.Trust helps to identify these challenges early on. With just a few questions, our assessment tool shows which risks are relevant to your use case – and which protection and quality measures you should plan for from the outset.

Development Phase

Good today, different tomorrow: LLMs are sensitive to changes in prompts, data and models. Without measurability, optimisation quickly becomes trial and error – and no one knows exactly why results fluctuate. LINK2AI.Trust creates transparency (where do errors occur, what triggers them?) and enables systematic, automated testing across updates. This makes improvement plannable – and your application delivers reliably instead of only occasionally.

Testing Phase

EU AI Act, NIS2, CRA, data protection, liability: many teams do not know exactly what applies and what obligations arise for products/projects, operations and documentation. With LLMs, risks are often ‘behavioural risks’: incorrect information, lack of traceability, lack of resilience. We help to classify relevant specifications and derive practical requirements. LINK2AI.Trust provides support where technical measures are required: controlled quality, traceable behaviour and verifiable reliability.

Approval Phase

LLMs can be manipulated using natural language – without any hacking expertise. Attacks come via prompts, but also indirectly via documents, emails or websites. Traditional measures are only effective to a limited extent because the vulnerability lies in the content. LINK2AI.Trust closes this gap: detection of jailbreaks, prompt injections and indirect injections – plus mechanisms to block or safely defuse risky inputs. This makes LLM security verifiable and approvals more sound. LLM security begins where traditional security ends.

The details

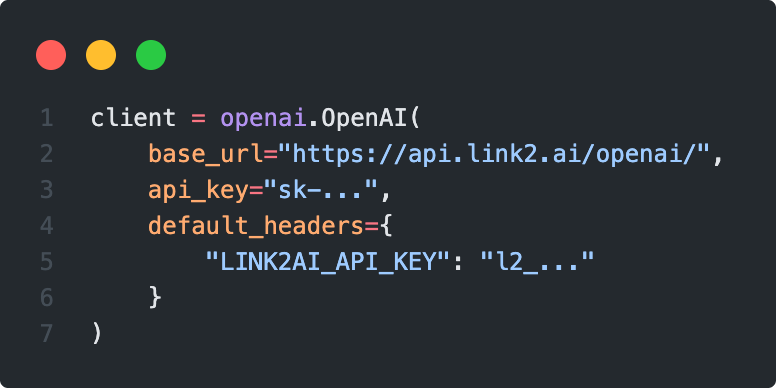

Easy to integrate

Works across any LLM provider, application domain or language. Immediately get valuable insights by changing just a few lines of Code.

Realtime monitoring

LINK2AI.Trust is built to perform in high throughput, low latency applications. It scales with your requirements so you can focus on providing great applications to your customers.

Highly configurable

Every application scenario is different. LINK2AI.Trust is designed to cover them all. Useful defaults paired with extensive configuration options lets you have a platform tailored exactly to your requirements.

LINK2AI.Trust inside

Whether you are building an application for your enterprise or offering an innovative LLM-based product and want to use LINK2AI.Trust as an OEM component: Its flexibility allows any kind of integration into your existing software stack. As an easy to integrate SaaS Platform or a whitelabel microservice on your own infrastructure.

.svg)

"LINK2AI and PwC are working on an AI Safety Platform that standardises the assessment of runtime risks for administrators and directly for AI products. LINK2AI provides the monitor as the core technology for this action-oriented risk measurement. We greatly value this joint, innovative collaboration."

Supported by leading research institutes and partners